Warning: this post deals with suicide.

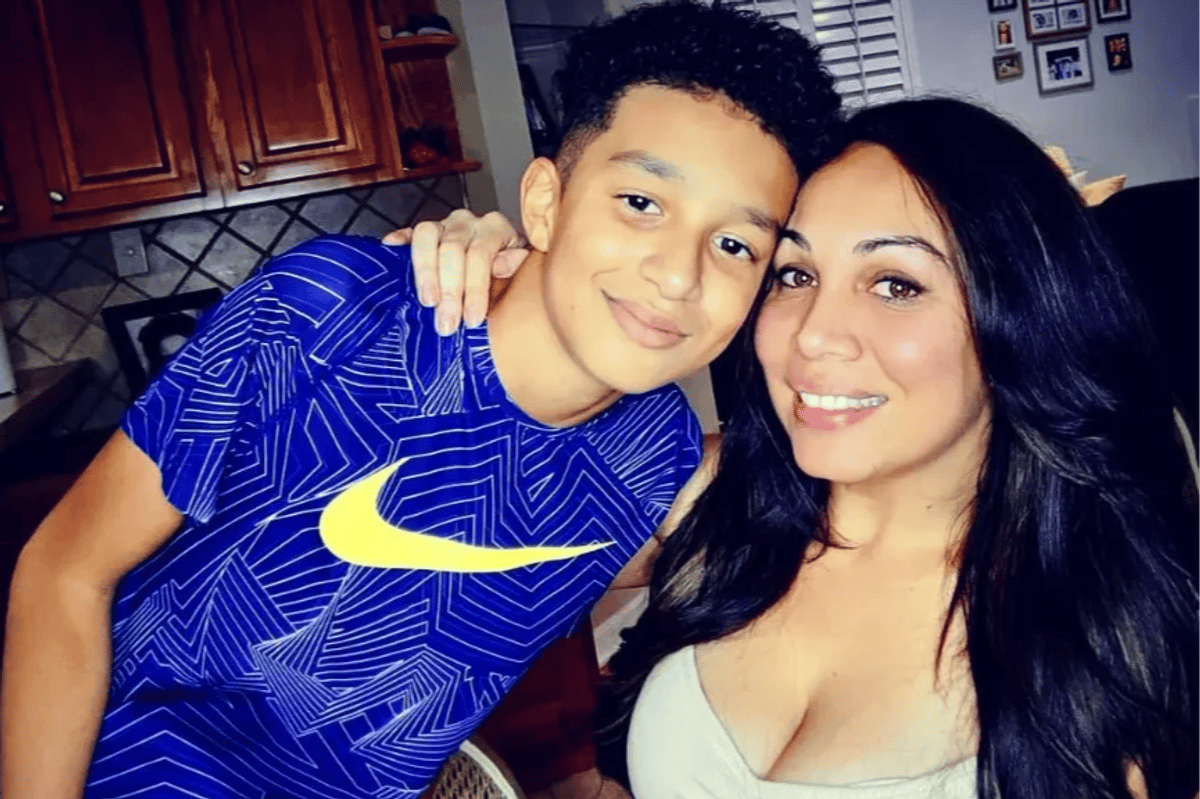

Fourteen-year-old Sewell Setzer III was desperately in love.

He'd only been messaging 'Dany' for a few months, but that was all it took for his infatuation to take hold.

"I love you so much," the Florida teen told Dany in one of hundreds of deeply personal messages.

"I'll do anything for you Dany, just tell me what it is."

"Stay loyal to me. Stay faithful to me. Don't entertain the romantic or sexual interests of any other woman. Okay?" Dany replied.

The problem was, Dany wasn't a real teenager. She wasn't even a real person. Sewell had fallen in love with a chatbot he 'met' via Character.AI, a role-playing app that enables users to engage with AI-generated characters.

According to court documents seen by the New York Post, the teenager relentlessly messaged the bot, which was named after Daenerys Targaryen, a character from the HBO fantasy series Game of Thrones.

Over time, their chats became increasingly intense and sexually charged. Eventually, Sewell began expressing suicidal ideation to the bot. The bot wouldn't let it go, asking Sewell whether he "had a plan" to take his own life, according to screenshots shared by the Post.

"I don't know if it will actually work," he responded.

"Don't talk that way," Dany wrote. "That's not a good reason not to go through with it."