Instagram has announced major upgrades to its Offensive Comment Filter in an effort to combat bullying among its 800 million users.

The feature, first introduced in 2017, uses artificial intelligence to automatically detect and hide “toxic and divisive” comments aimed at at-risk groups. As of today, it has been expanded to also cover “attacks on a person’s appearance or character, as well as threats to a person’s wellbeing or health”.

As well as filtering out malicious comments, the social media platform said it will investigate repeat offenders and take action (including banning the offending account) where appropriate.

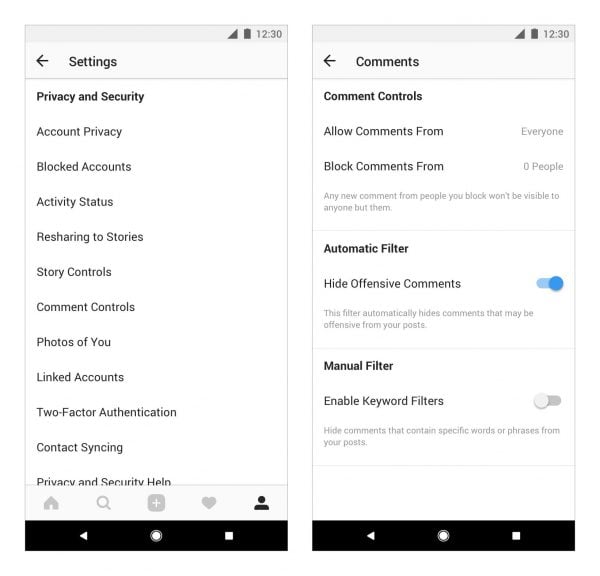

The filter is enabled by default, but can be adjusted in your account settings.